PROJECTS

Social Media Bot Detection

Brief Summary

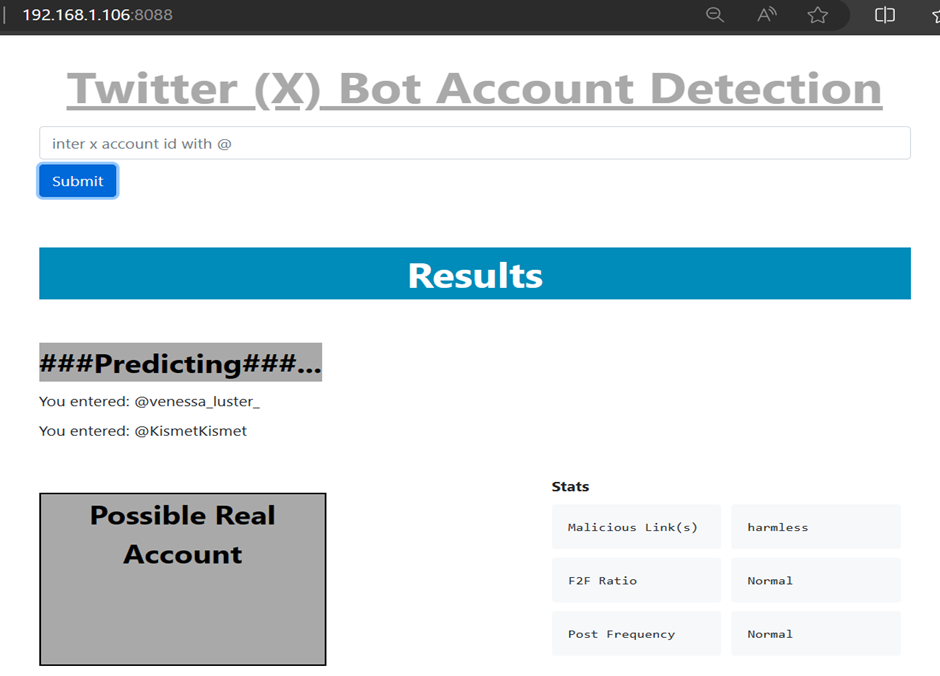

In this project we use the Twitter API v2, VirusTotal and a trained machine learning model to automate social media bot detection, focusing on X (formerly Twitter).

Steps

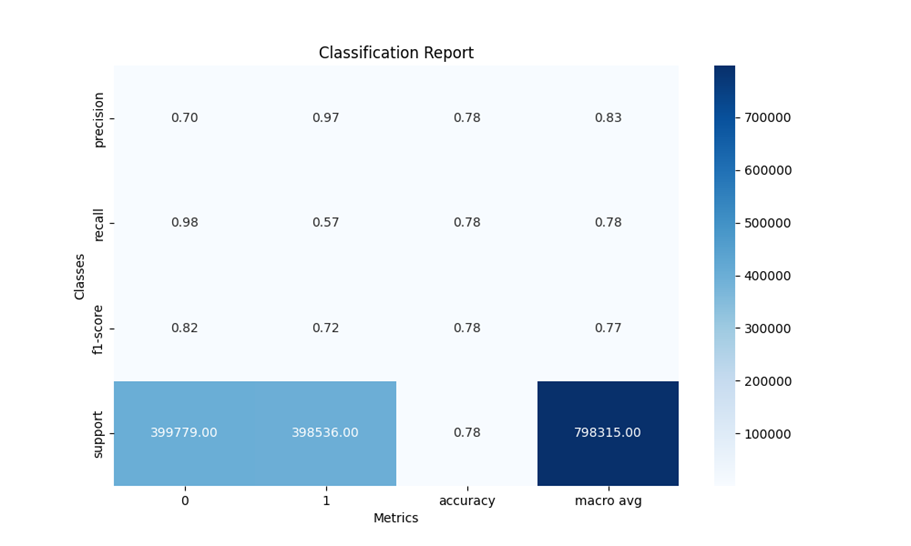

- Trained a model on an existing dataset using the Random Forest algorithm from sklearn

- Used the Twitter API v2 to query a user's account by account_id. This returns JSON data with fields such as post URLs, username, account creation date, post metadata etc.

- The JSON data is converted to CSV, and the metrics of interest are extracted based on the features used to train the prediction model.

- The metrics used are URLs, post metadata (to calculate post frequency) and the follower-to-following (f2f) ratio

- The extracted URLs are passed to VirusTotal through an API call to check whether each URL is malicious

- Based on the VirusTotal results, the post frequency and the f2f ratio, the model predicts whether the queried account is likely a bot.
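The feature steps above can be sketched roughly as follows. This is a minimal standard-library illustration, not the project's actual code: the function names (`post_frequency`, `f2f_ratio`, `virustotal_url_id`, `is_flagged`, `build_features`) and the threshold are hypothetical. The URL identifier assumes VirusTotal's v3 convention of an unpadded URL-safe base64 encoding of the URL, and `stats` is assumed to look like the `last_analysis_stats` object of a v3 URL report.

```python
import base64
from datetime import datetime


def post_frequency(timestamps: list[str]) -> float:
    """Average posts per day over the span covered by ISO-8601 timestamps."""
    if len(timestamps) < 2:
        return float(len(timestamps))
    times = sorted(
        datetime.fromisoformat(t.replace("Z", "+00:00")) for t in timestamps
    )
    span_days = (times[-1] - times[0]).total_seconds() / 86400
    # Treat any span shorter than one day as a full day to avoid inflated rates.
    return len(times) / max(span_days, 1.0)


def f2f_ratio(followers: int, following: int) -> float:
    """Follower-to-following ratio; accounts following nobody divide by 1."""
    return followers / max(following, 1)


def virustotal_url_id(url: str) -> str:
    """URL identifier for a lookup: URL-safe base64 without '=' padding (assumed v3 convention)."""
    return base64.urlsafe_b64encode(url.encode()).decode().rstrip("=")


def is_flagged(stats: dict, threshold: int = 1) -> bool:
    """True when at least `threshold` engines marked the URL as malicious."""
    return stats.get("malicious", 0) >= threshold


def build_features(timestamps, followers, following, url_stats) -> dict:
    """Assemble the three metrics into one feature row for the trained model."""
    return {
        "post_frequency": post_frequency(timestamps),
        "f2f_ratio": f2f_ratio(followers, following),
        "malicious_urls": sum(is_flagged(s) for s in url_stats),
    }
```

The resulting row would then be passed to the trained Random Forest model in the same column order used during training.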

Conclusion

Due to the nature of the project, it did not use enough metrics to determine reliably whether an account is a bot. To improve it, more metrics should be included and reflected in the features used to train the model. Finally, with enough computing resources, the implementation could be extended to other social media platforms, especially text-based ones; platforms like Instagram and YouTube are image- and video-based respectively and would require a different analysis approach.

Source - GitHub Repo SM bot Detection

A simple Flask interface for the project

Network Forensics

Brief Summary

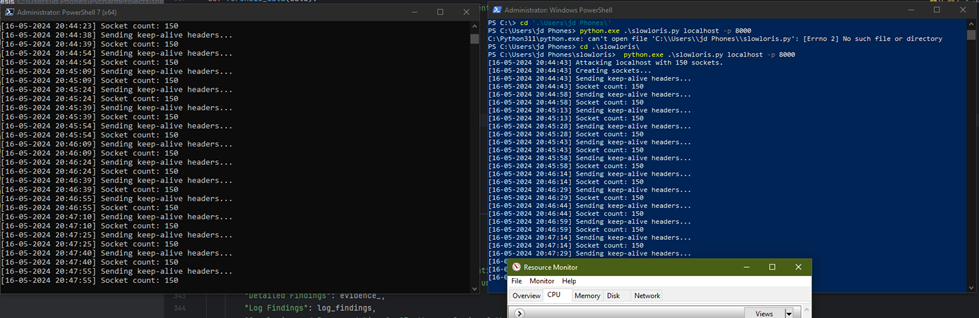

In this project we trained machine learning models using both supervised and unsupervised learning approaches on the CSE-CIC-IDS2018 dataset to automate the detection of anomalous events in a network. The unsupervised model was used for detection because it performs better on unknown threats and is therefore more effective in real-time forensics. Based on the detection results, packets are captured and encrypted, both as evidence and for further analysis, and a brief report is generated. Testing was done on a local network against a simulated Slowloris attack.

Steps

- Converted the dataset from CSV to Parquet format to improve memory efficiency

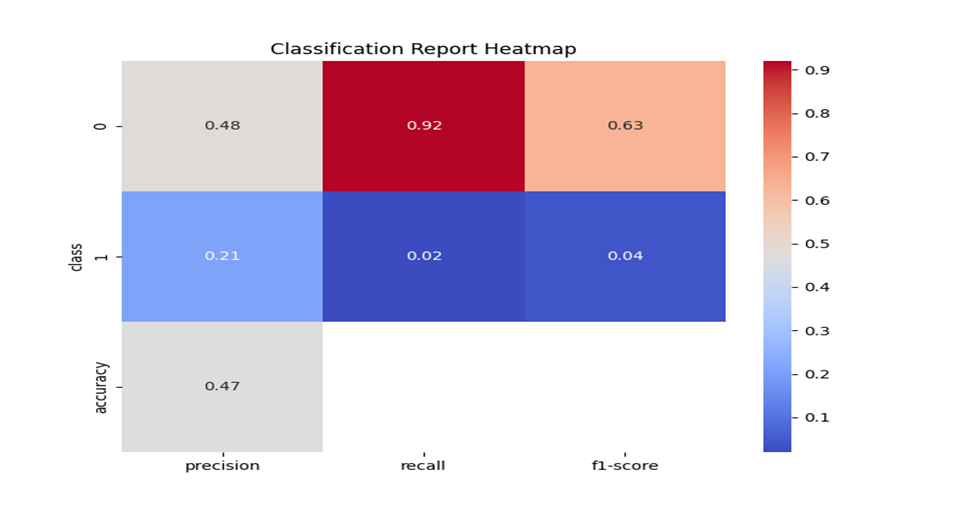

- Trained the supervised model using the Decision Tree Classifier algorithm

- Trained the unsupervised model using the Isolation Forest algorithm

- Simulated a Slowloris attack on a dummy local server

- Used nfstream to sniff the network for flow data and extracted the features the unsupervised model needs to detect anomalous events such as abnormal packet size, abnormal header info or an uncompleted TCP handshake.

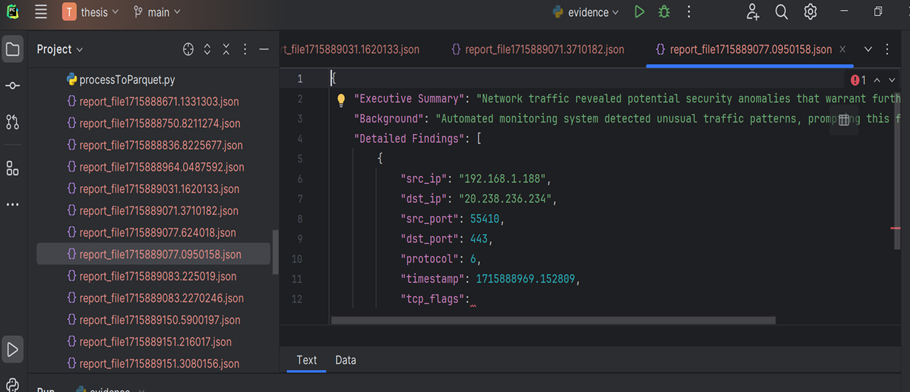

- Based on the detection results, packets are captured and basic analysis is done with scapy to extract packet info such as IP addresses, TCP flags, timestamps etc. The packets are then encrypted and saved as evidence and for further analysis

- A report is generated based on the evidence extracted
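The reporting step could look something like the sketch below. It is an illustration only and assumes the packet fields have already been extracted (for example with scapy) into simple records; `PacketRecord`, `build_report` and the report keys are hypothetical names, not taken from the project. Counting bare SYN packets per source is a simple heuristic for uncompleted handshakes and stands in for whatever the actual report contains.

```python
import json
from collections import Counter
from dataclasses import dataclass


@dataclass
class PacketRecord:
    """Fields assumed to have been pulled from one captured packet."""
    timestamp: float  # capture time, seconds since the epoch
    src_ip: str
    dst_ip: str
    tcp_flags: str    # flag letters such as "S", "SA", "A", "PA"


def build_report(records: list[PacketRecord]) -> dict:
    """Summarise flagged packets into a small JSON-serialisable report."""
    if not records:
        return {"packet_count": 0}
    per_source = Counter(r.src_ip for r in records)
    # A bare SYN opens a handshake; many of them from one source may
    # indicate connections that were never completed.
    syn_only = Counter(r.src_ip for r in records if r.tcp_flags == "S")
    times = [r.timestamp for r in records]
    return {
        "packet_count": len(records),
        "first_seen": min(times),
        "last_seen": max(times),
        "packets_per_source": dict(per_source),
        "syn_only_per_source": dict(syn_only),
        "top_source": per_source.most_common(1)[0][0],
    }


if __name__ == "__main__":
    demo = [
        PacketRecord(100.0, "10.0.0.5", "10.0.0.1", "S"),
        PacketRecord(101.5, "10.0.0.5", "10.0.0.1", "S"),
        PacketRecord(102.0, "10.0.0.9", "10.0.0.1", "PA"),
    ]
    print(json.dumps(build_report(demo), indent=2))
```

Keeping the report as a plain dictionary makes it easy to store next to the encrypted capture or render into a readable summary later.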

Conclusion

The model's prediction accuracy can be improved with effective feature engineering. The dataset was gathered using CICFlowMeter, while the live flow capture used for testing relied on nfstream, which could not capture all the relevant features: out of over 80 features, only 16 were used. Other techniques, such as sampling, could also use more efficient methods if more computing capacity is available. Finally, for better evaluation, a more sophisticated testing environment could be employed.

Source - GitHub Repo networkforensics

Wazuh and Splunk Implementation

Brief Summary

This project explores Wazuh and Splunk integration. A Wazuh server on a Linux system creates events by parsing logs from a Wazuh agent installed on a Windows system. On the same Linux system, a Splunk forwarder forwards the Wazuh logs to a second Linux server running Splunk, where reports, dashboards and alerts can be created from the received logs.

Steps

- Installed Wazuh by running the wazuh-installer.sh script

- Confirmed installation and service status via systemctl

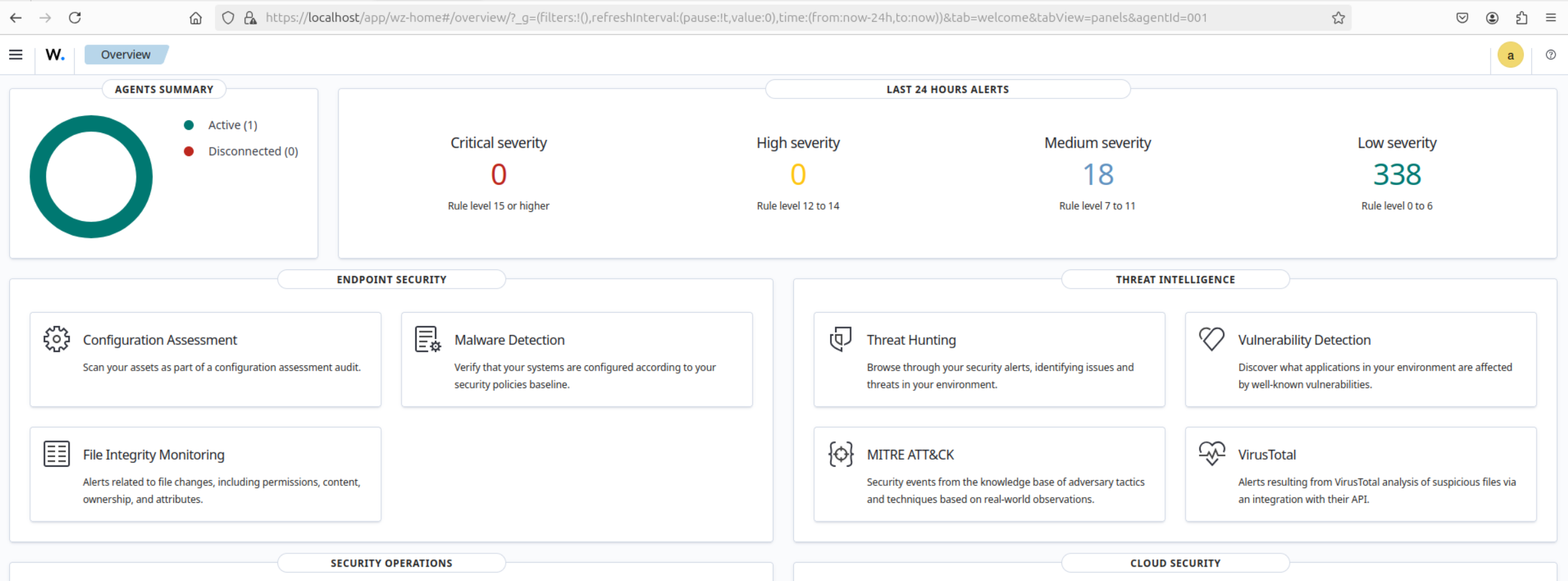

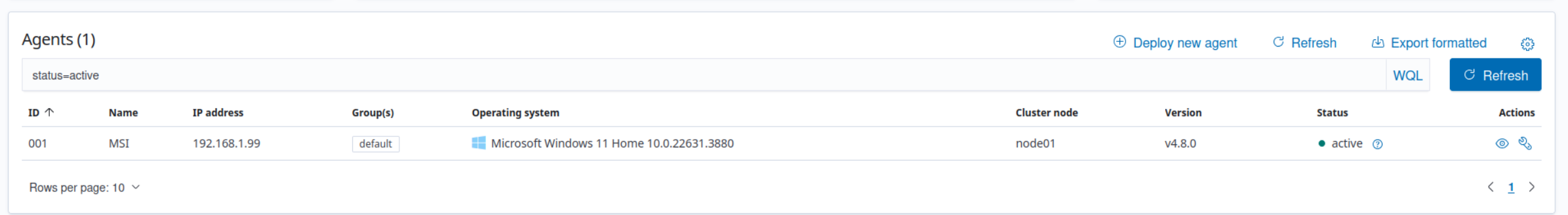

- Accessed the dashboard on localhost

- Logged in with the admin user credentials from the wazuh-password file

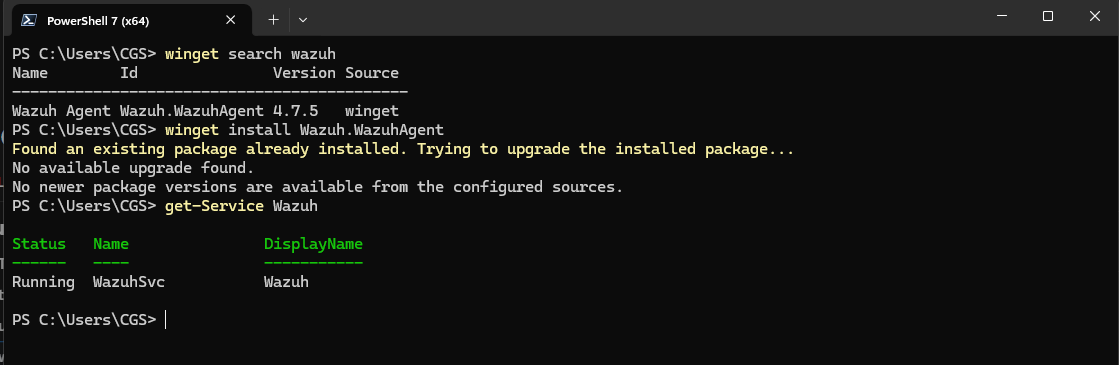

- Installed wazuh-agent on Windows via winget

- Set the Wazuh server IP address in the ossec.conf file

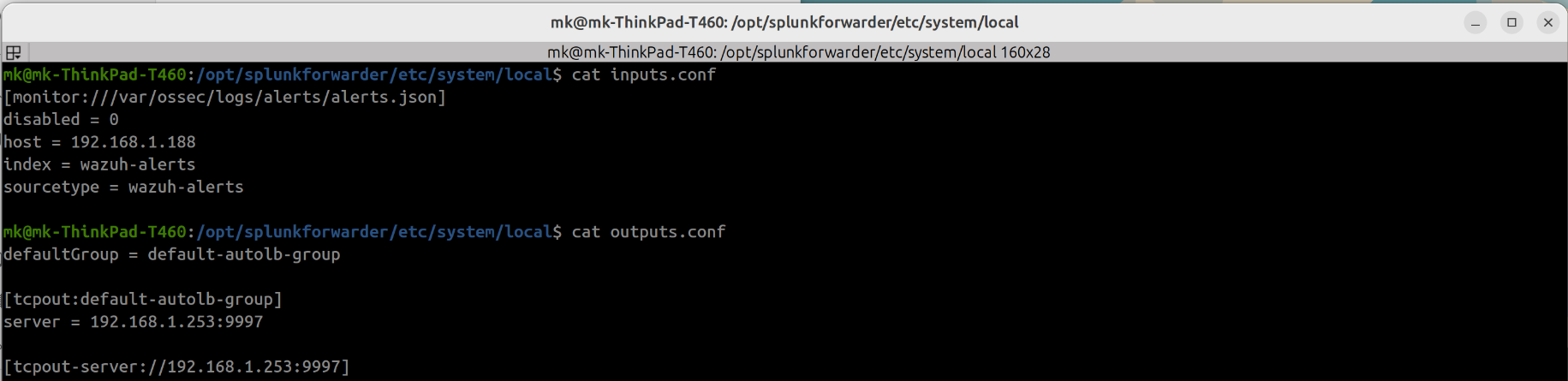

- Installed and configured splunk-forwarder

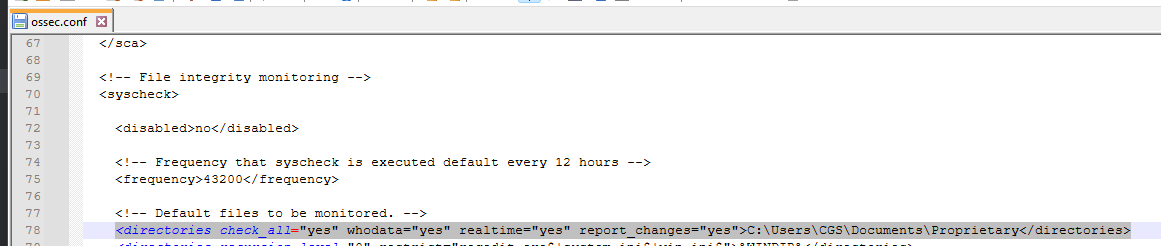

- Example using Wazuh's file integrity monitoring

- On the Wazuh agent system, the directory of the files to be monitored is set in the ossec.conf file

- Wazuh then monitors changes to the directory and its contents

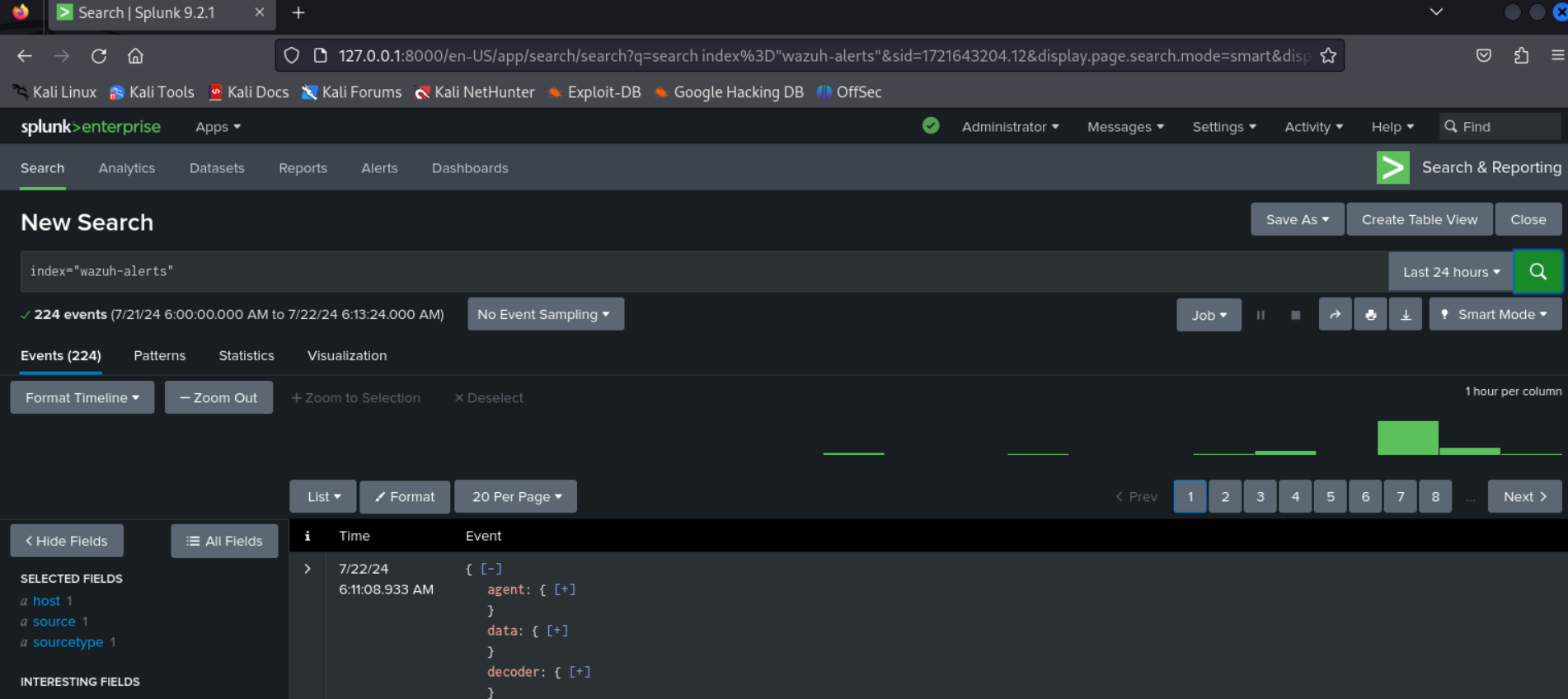

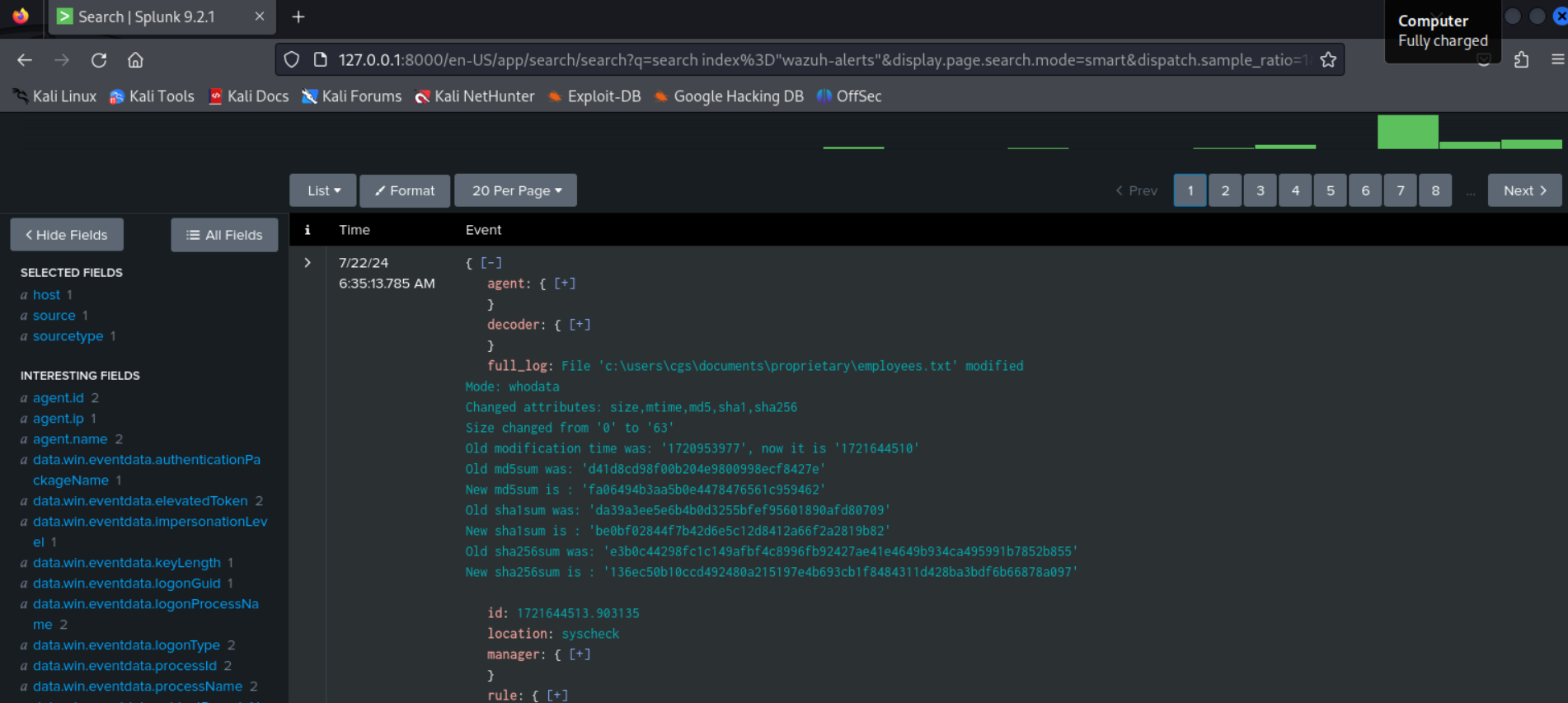

inputs.conf specifies the local log file to monitor, and outputs.conf specifies the server that receives the data, in this case the Splunk server running in a VM. Searching the "wazuh-alerts" index in Splunk's Search & Reporting app shows the Wazuh events received from the Splunk forwarder.

A use case for this setup could be to monitor and prevent unauthorised data access or exfiltration, using Wazuh to set the directories and files to monitor and Splunk to set triggered actions on alerts.

Here we see changes to the file employees, which Wazuh logs and sends over to Splunk as an event. Alert triggers can then be set in Splunk to, say, send an email to admins or management.
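To illustrate the kind of event involved, the sketch below filters file integrity monitoring alerts for a watched file from Wazuh's JSON alert log, where each line is one JSON object. It is a hypothetical helper, not part of the project: the function name `fim_changes` is made up, and the field names (`syscheck.path`, `syscheck.event`, `agent.name`) are assumed to match the alert layout of the Wazuh version in use.

```python
import json


def fim_changes(lines, watched_fragment):
    """Yield (agent, path, event) for FIM alerts whose path contains watched_fragment."""
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            alert = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip partial or malformed lines
        if not isinstance(alert, dict):
            continue
        syscheck = alert.get("syscheck")
        if not isinstance(syscheck, dict):
            continue  # not a file integrity monitoring alert
        path = syscheck.get("path", "")
        # Case-insensitive match, since Windows paths may vary in case.
        if watched_fragment.lower() in path.lower():
            agent = alert.get("agent", {}).get("name", "unknown")
            yield agent, path, syscheck.get("event", "unknown")
```

Called with the open alert log and the fragment `"employees"`, it would return only the changes to that file, which is roughly what a Splunk search with an alert action would match on.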

Conclusion

The Wazuh platform comes with robust security solutions such as EDR, Threat Intelligence (MITRE ATT&CK), Security Operations (PCI DSS, GDPR) and Cloud security. It is versatile and integrates well with other platforms to realise a SOAR solution that automates security response. Like Microsoft's Azure Sentinel, it can be deployed in the cloud, but it can also run on-prem.